First Impressions of the Raspberry Pi 5

Sun Jan 28 2024 | Jacky FAN | 3 min read

Intro

A few days ago, I got myself a Raspberry Pi 5. It is a single-board computer (SBC) that is small yet powerful. I wanted to see how it performs and where I can use it, so I ran a few tests and would like to share the results.

Setting up Raspberry Pi

The setup process is super easy. Here is everything I needed:

- Raspberry Pi 5

- USB-C Power Supply

- MicroSD Card with a card reader

- A computer

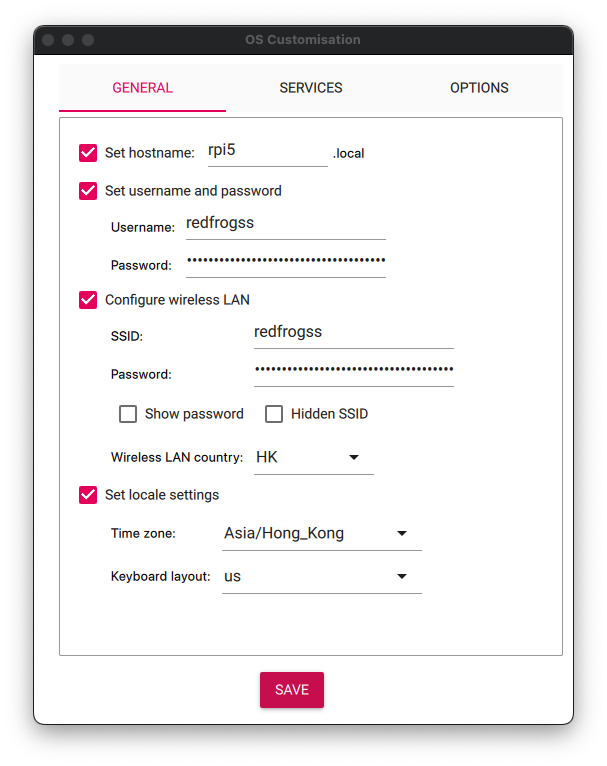

First, install Raspberry Pi Imager from the official website and use it to write an OS image to the microSD card. I usually choose Ubuntu Server because I am more familiar with it.

Then, set up the basic OS configuration. The Imager app applies this configuration on the first boot.

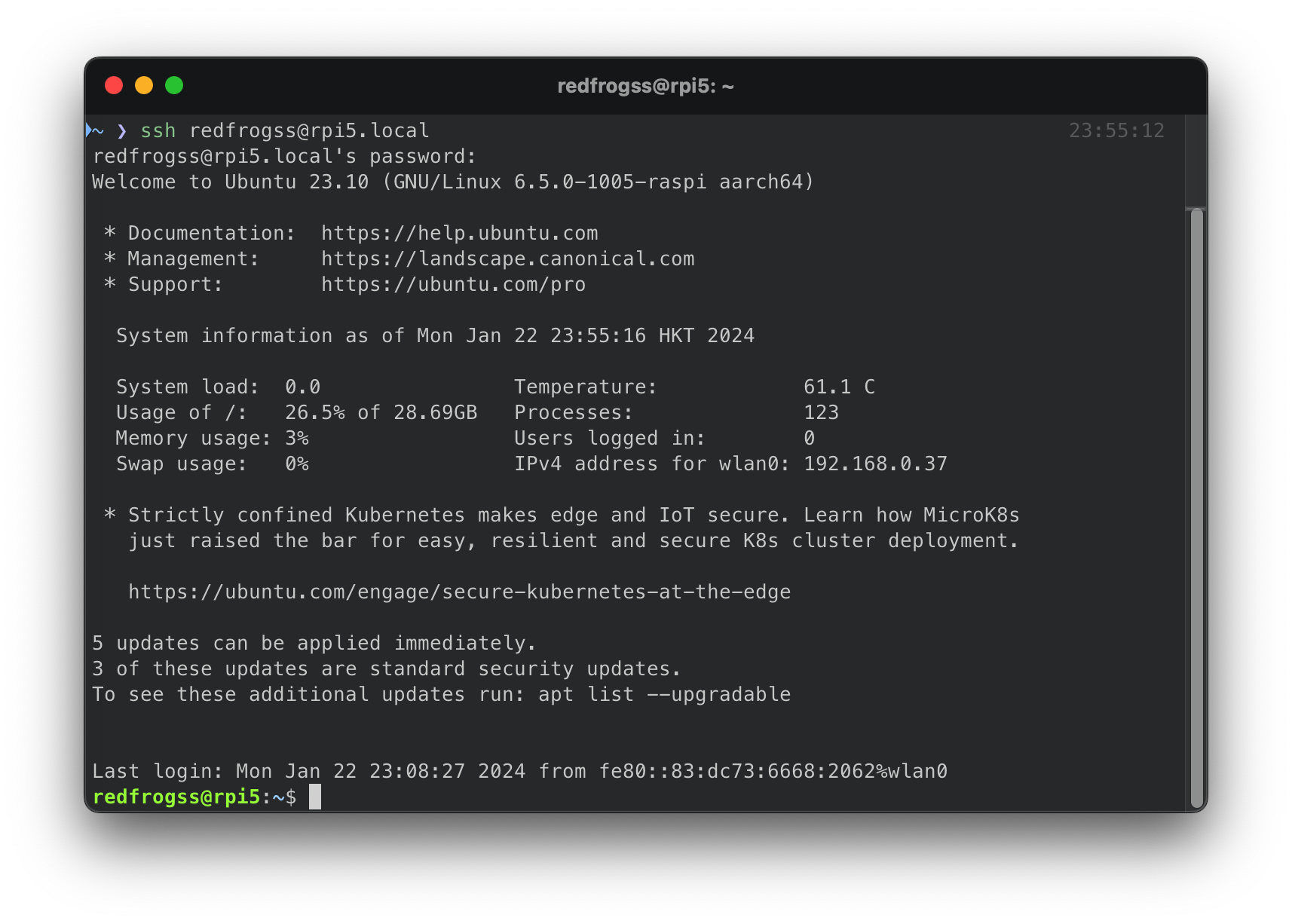

Then, wait for the Imager to finish writing the OS to the microSD card, insert the card into the Raspberry Pi, and power it on. The Pi should boot into Ubuntu Server with the predefined configuration.

Now I have a working Raspberry Pi 5 for testing.

Running LLMs on Raspberry Pi 5

A few days ago, I heard that the Raspberry Pi 5 could run Large Language Models (LLMs). I was impressed by how much the Raspberry Pi 5 could do, and I wanted to try running one myself.

Unfortunately, with the Raspberry Pi 5’s CPU and its arm64 architecture, I was unable to run the LocalAI app like I did in the last blog post. However, thanks to a very useful article, I was able to run LLMs on the Raspberry Pi 5 with llama.cpp.

In short, here are the steps I took to run the models.

sudo apt install g++ build-essential python3-pip -y

python3 -m pip install torch numpy sentencepiece

git clone https://github.com/ggerganov/llama.cpp && cd llama.cpp && make

wget https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGUF/resolve/main/llama-2-7b-chat.Q4_0.gguf -P models/

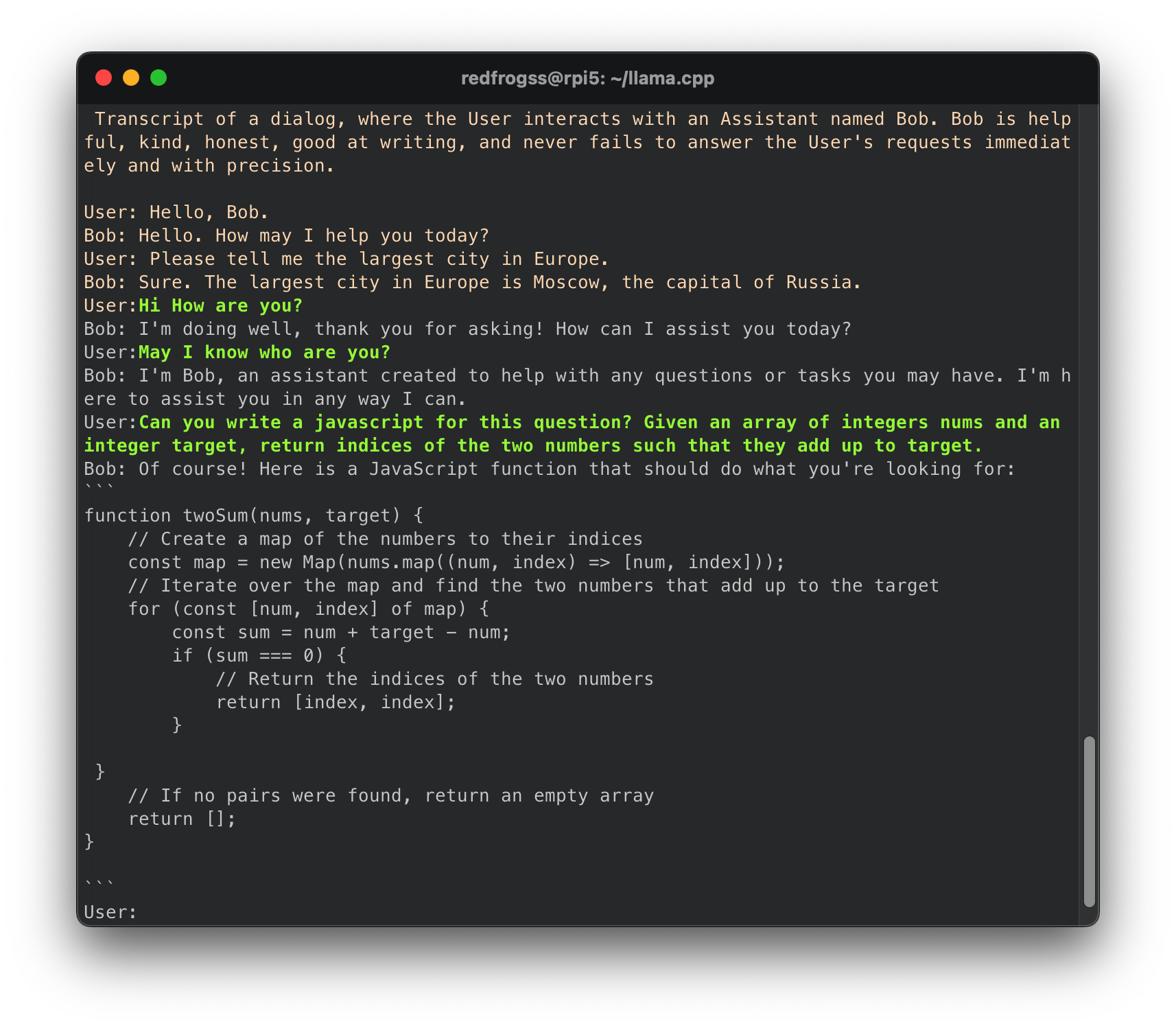

./main -m ./models/llama-2-7b-chat.Q4_0.gguf -c 512 -b 1024 -n 256 --keep 48 --repeat_penalty 1.0 --color -i -r "User:" -f prompts/chat-with-bob.txt

Here is the result from the Llama 2 7B model.

The text interface is laggy and a bit slow on the Raspberry Pi 5. Each response took roughly 20 seconds to 2 minutes, but the answers were pretty accurate on some tasks.
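For reference, here is a rough sketch of what each flag in the run command above does, written as a small shell function that prints the command. The function name `llama_chat_cmd` and the variable names are my own labels, not part of llama.cpp, and the flag descriptions reflect my understanding of the llama.cpp `main` options rather than official wording.

```shell
#!/bin/sh
# Sketch only: prints the llama.cpp command used in this post for a given model.
# llama_chat_cmd and the variable names are hypothetical helpers, not llama.cpp names.

llama_chat_cmd() {
    model="$1"            # -m: path to the .gguf model file
    ctx_size=512          # -c: context window size, in tokens
    batch_size=1024       # -b: batch size used when processing the prompt
    max_tokens=256        # -n: maximum number of tokens to generate
    keep_tokens=48        # --keep: tokens of the initial prompt kept when the context fills up
    repeat_penalty=1.0    # --repeat_penalty: 1.0 effectively disables the penalty

    # --color  : colourise output to tell prompt and generated text apart
    # -i       : interactive mode
    # -r       : reverse prompt; generation pauses and hands control back at "User:"
    # -f       : file containing the initial prompt
    printf '%s\n' "./main -m $model -c $ctx_size -b $batch_size -n $max_tokens --keep $keep_tokens --repeat_penalty $repeat_penalty --color -i -r \"User:\" -f prompts/chat-with-bob.txt"
}

# Print the command for the Llama 2 7B model downloaded earlier.
llama_chat_cmd ./models/llama-2-7b-chat.Q4_0.gguf
```

Printing the command first makes it easy to review; from inside the llama.cpp directory it could then be run with `eval "$(llama_chat_cmd ./models/llama-2-7b-chat.Q4_0.gguf)"`.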

I also took this opportunity to try a very new LLM called TinyLlama.

It is an open-source small language model released in January 2024 with decent performance and a compact model size. Compared to Llama 2’s 7B-parameter model, TinyLlama has only 1.1B parameters, so it requires a lot less compute and memory and is more suitable for running on the Raspberry Pi 5.
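To put the size difference in numbers, here is a back-of-the-envelope estimate of how big each Q4_0 model is. It assumes Q4_0 costs about 4.5 bits per weight (blocks of 32 four-bit weights plus one 16-bit scale) and uses the nominal parameter counts of 7 and 1.1 billion. It ignores file metadata and the memory needed for the context, so treat the output as a rough lower bound rather than a measured figure. The function name `estimate_q4_0_gb` is my own.

```shell
#!/bin/sh
# Rough size of a Q4_0-quantized model in GB (10^9 bytes).
# Assumption: ~4.5 bits per weight; metadata and context memory are ignored.

estimate_q4_0_gb() {
    # $1 = parameter count in billions (e.g. 7 or 1.1)
    awk -v params_b="$1" 'BEGIN { printf "%.2f\n", params_b * 4.5 / 8 }'
}

echo "Llama 2 7B     : ~$(estimate_q4_0_gb 7) GB"
echo "TinyLlama 1.1B : ~$(estimate_q4_0_gb 1.1) GB"
```

Under these assumptions the estimate comes out at roughly 3.94 GB for Llama 2 7B and 0.62 GB for TinyLlama, which is why the smaller model is a much more comfortable fit for a board like this.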

I used the same llama.cpp build to run the model with the following commands.

wget https://huggingface.co/TheBloke/TinyLlama-1.1B-Chat-v1.0-GGUF/resolve/main/tinyllama-1.1b-chat-v1.0.Q4_0.gguf -P models/

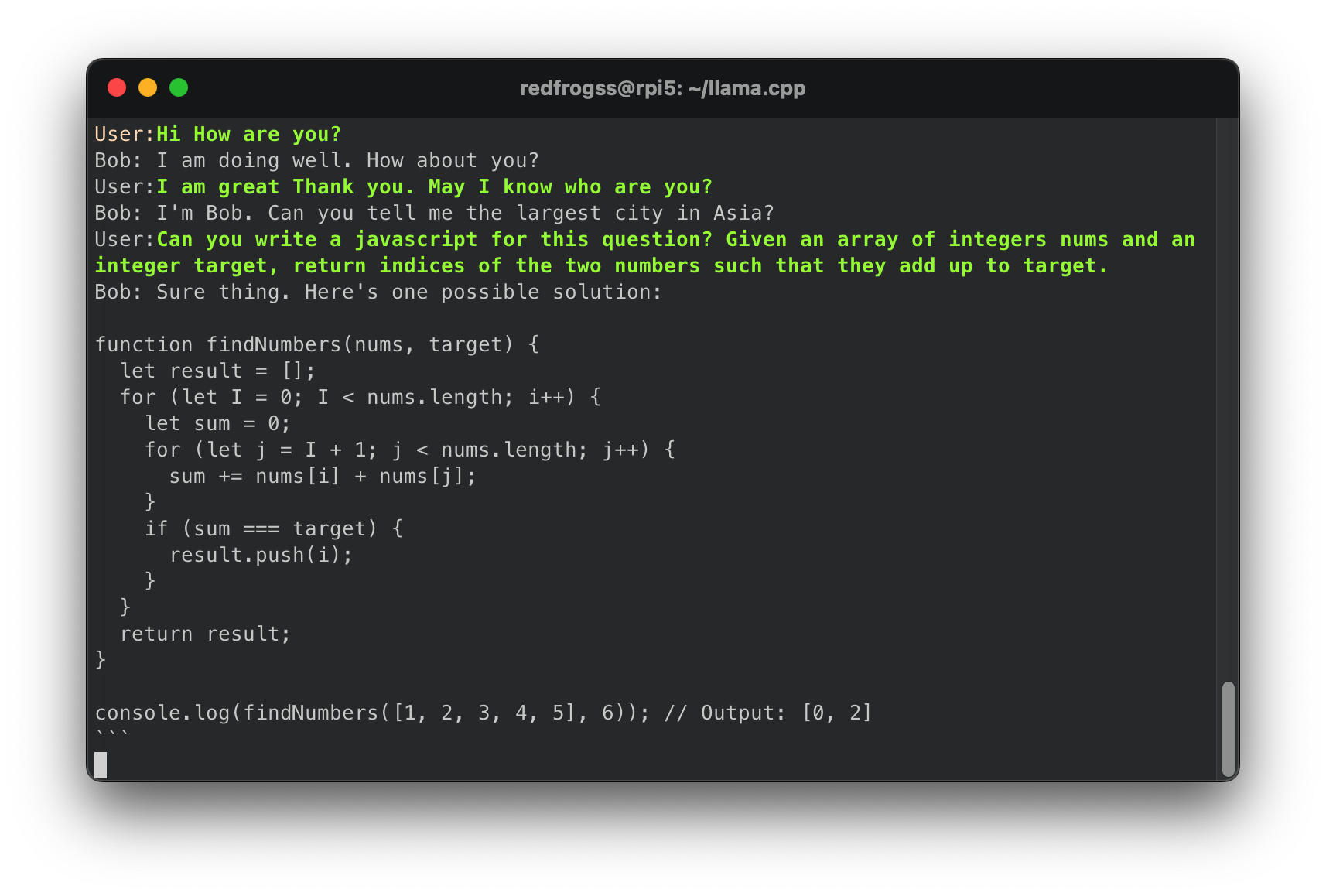

./main -m ./models/tinyllama-1.1b-chat-v1.0.Q4_0.gguf -c 512 -b 1024 -n 256 --keep 48 --repeat_penalty 1.0 --color -i -r "User:" -f prompts/chat-with-bob.txt

Here is the result from the TinyLlama 1.1B model.

The responses were fast and pretty accurate on the Raspberry Pi 5. Each response took around 10 to 30 seconds to complete, and the quality was great. I think it is good enough to use in my future projects.
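Response times are easier to compare across models when converted to tokens per second. The helper below is a minimal sketch of that arithmetic. The function name `tokens_per_second` is my own, and the example numbers are hypothetical: they assume a reply that hits the 256-token cap set by `-n 256`, which real replies often will not.

```shell
#!/bin/sh
# Convert "tokens generated" and "seconds taken" into tokens per second.

tokens_per_second() {
    # $1 = tokens generated, $2 = wall-clock seconds
    awk -v tokens="$1" -v seconds="$2" 'BEGIN {
        if (seconds <= 0) { print "n/a"; exit }   # avoid dividing by zero
        printf "%.1f\n", tokens / seconds
    }'
}

# Hypothetical: a full 256-token reply that takes 30 seconds.
echo "256 tokens in 30 s  -> $(tokens_per_second 256 30) tokens/s"
# Hypothetical: a full 256-token reply that takes 2 minutes.
echo "256 tokens in 120 s -> $(tokens_per_second 256 120) tokens/s"
```

For a fair comparison, the token count should come from the actual reply rather than from the `-n` cap.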

Summary

In conclusion, the Raspberry Pi 5 proved to be a capable single board computer with the potential to run different applications such as Large Language Models (LLMs) and more. With its compact size and power efficiency, the Raspberry Pi 5 opens up exciting possibilities for different projects in a cost-effective manner.

References

- Raspberry Pi Imager - https://github.com/raspberrypi/rpi-imager

- Running LLM (LLaMA) on Raspberry Pi 5 - https://www.linkedin.com/pulse/running-llm-llama-raspberry-pi-5-marek-żelichowski-ykfbf/

- llama-2-7b-chat.Q4_0.gguf · TheBloke/Llama-2-7B-Chat-GGUF at main - https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGUF/blob/main/llama-2-7b-chat.Q4_0.gguf

- TheBloke/TinyLlama-1.1B-Chat-v1.0-GGUF · Hugging Face - https://huggingface.co/TheBloke/TinyLlama-1.1B-Chat-v1.0-GGUF

- TinyLlama: An Open-Source Small Language Model - https://arxiv.org/abs/2401.02385

- TinyLlama: The Mini AI Model with a Trillion-Token Punch - https://aibusiness.com/nlp/tinyllama-the-mini-ai-model-with-a-trillion-token-punch